Last month, I attended a talk as part of the excellent Federation Presents series. This one invited Sara Wachter-Boettcher, author of Technically Wrong, as a keynote speaker for a talk entitled "Toxic Tech".

I've since read her book (thanks, Harriet!), and felt really inspired to look at what some of her ideas might mean for us developers. I highly recommend reading it! Truly ideas worth spreading.

There are so many interesting avenues of thought in this topic that it is hard to know where to start. So what we'll do in this blog is simply look at four important examples.

We're Responsible For What We Build

Yes! We are. We are responsible for everything that we do, whether we are being paid to do it or not.

No. That does not mean we need to quit our jobs and sacrifice our income and livelihood on ethical grounds over a refusal to create a slightly biased UX.

What it should mean is that we are cognizant of what we are doing (or not doing), and take risks proportional to our positions and circumstances to make suggestions about what our product, team, design, testing processes - whatever - could be doing better.

Ethical, Responsible Development

Should What's Default Be Default?

Did you know that office temperatures are significantly more likely to be set at a man's comfort level?

Defaults directly inform what we perceive to be "normal" versus what we perceive to be "different". We're also psychologically predisposed to value whatever is default more highly.

I borrow an interesting example from Wachter-Boettcher's book to illustrate.

In 2015, a high-school pupil named Madeline Messer was perturbed when she asked her female friend why she wasn't using a female avatar in her online game. Her friend replied that a female avatar simply wasn't available. You have to admire what Messer did next. With the permission of her parents, she downloaded the top fifty "endless runner" games from the iTunes store. She found that 9 of them had non-gendered avatars. Of the remaining 41, 40 offered a male option, but only 23 offered a female option. It gets worse. Male characters were free in 90%, with female characters free in just 15%.

That's pretty poor. Now, nobody's saying that it's the fault of the developer who coded it, or the graphics designer who created them visually, or the product designer who conceived them.

What we can do is not make these kinds of mistakes ourselves.

"Edge Case People"

"We can't cater to everyone!"

Whilst that's probably true, that is no excuse for not considering what kinds of people might use our tech.

Last year, a video of a black man trying to get soap out of a soap dispenser went viral.

If you have ever had a problem grasping the importance of diversity in tech and its impact on society, watch this video pic.twitter.com/ZJ1Je1C4NW

— Chukwuemeka Afigbo (@nke_ise) August 16, 2017

Afigbo's caption says it all.

I am regularly frustrated with forms that insist my last name, Ng, is invalid because it's too short. It's frustrating for people who don't identify as women or men when those are the only two available options on a form. It's frustrating when you get an email encouraging men to buy gifts "for her" (who says it's a "her"? Who says there's anyone at all?).
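To make the name example concrete, here is a minimal sketch of a last-name check that doesn't impose a minimum length. The function name `is_valid_last_name` and the rule itself ("contains at least one letter") are my own assumptions for illustration, not something from the book or any particular form library, and real names can break even this rule.

```python
import unicodedata


def is_valid_last_name(raw: str) -> bool:
    """Hypothetical check: accept any name containing at least one letter.

    There is deliberately no minimum length, so short names like "Ng"
    (or a single character) pass.
    """
    # Normalise Unicode so composed and decomposed accents compare the same,
    # then drop surrounding whitespace.
    name = unicodedata.normalize("NFC", raw).strip()
    # str.isalpha() is Unicode-aware, so non-Latin scripts count as letters.
    return any(ch.isalpha() for ch in name)
```

The point of the design is to reject only input that is clearly empty, rather than guessing what a "normal" name looks like.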

"But it's no big deal!"

No. It's no big deal just to think about all kinds of people who use your tech, and avoid forcing people to mould themselves to the way you want them to be. These little things add up, and over a lifetime, they get to you. You're the odd one out. You're different. Ironically, the people who think it isn't a big deal are often those who have scarcely been on the receiving end, whose understanding is limited by their ability to imagine it.

Todd Rose has spared no effort in his life's work, shutting down this notion of average. Watch 1:00-2:00 if nothing else.

Edge cases make complete sense when we are referring to the features of a product.

But nobody should ever be describing a person as an edge case.

Ugly UX

There are a few nasty UX habits creeping up around the web. Let's look at one of them - "confirm shaming". It looks like this...

...and this...

So now, if you don't download a PDF, you make bad choices? Not nice, particularly on a website relating to health..! And apparently if we don't learn Italian, we're going to get an invitation to a funeral of a bird who died from eating a poisoned loaf of bread, which will be entirely our fault. Ouch!

Don't knowingly implement dark UX without questioning the person who asked for it. Get clued up on what to look for and what to avoid. Understand the dark side of UX. Don't spread it.

AI & Machine Learning

With the rise of technologies like Deep Cognition and TensorFlow, it's easier than ever to build AI with good intentions that reinforces our existing biases.

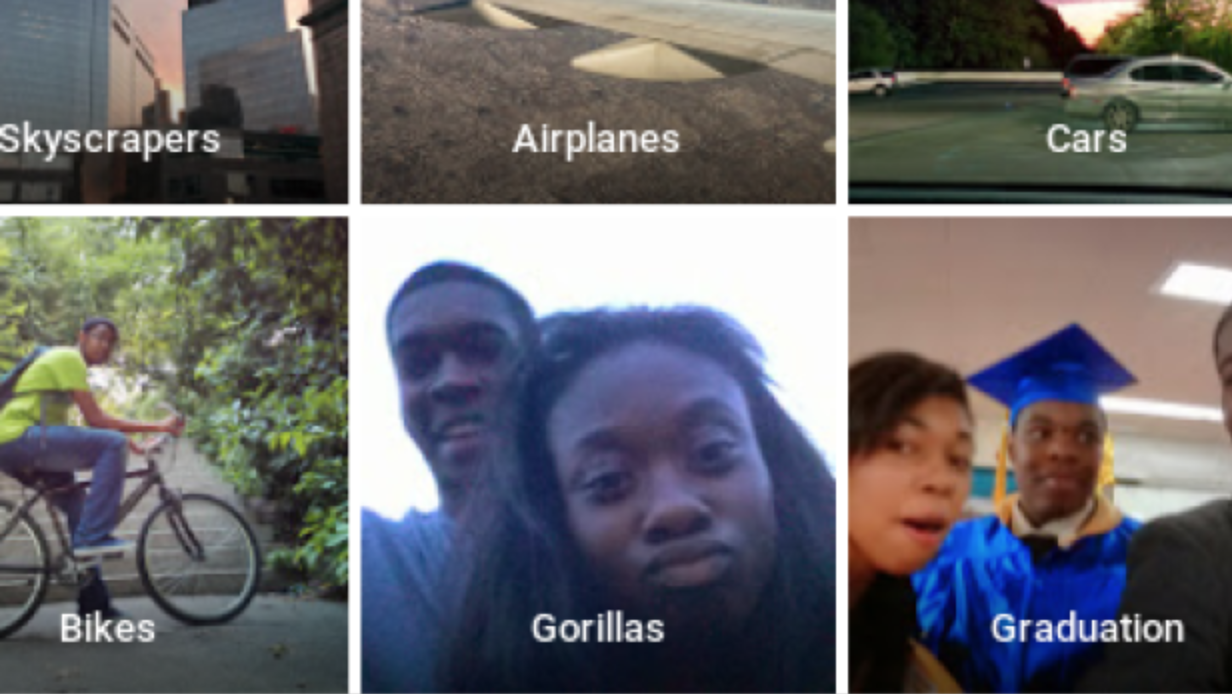

In her talk Toxic Tech, Wachter-Boettcher described the story of Jacky Alciné, a black man whose photos of himself and a friend were auto-tagged as gorillas by the Google Photos AI. Yes... That's right. It caused an outrage, of course, and Google apologised.

The software looked at millions of images pre-release and learned what they were. The problem was that the software was well-trained with plenty of lighter-skinned faces, but not anywhere near as many darker-skinned faces.

It's more important than ever that when we're writing algorithms, training a machine learning model or building AI, we assess possible biases, feed it (as far as possible) unbiased, representative data, and watch carefully for undesired behaviour.
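One very rough way to start assessing representation is simply to count. The sketch below assumes each training example already carries a group label; the function names, the example labels and the 20% threshold are all hypothetical choices of mine. Passing this check doesn't show that a dataset is unbiased. It only flags groups that are obviously thin or missing.

```python
from collections import Counter


def group_shares(groups):
    """Return each group's share of the dataset as a fraction of the total."""
    counts = Counter(groups)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def underrepresented(groups, expected, min_share=0.2):
    """List expected groups whose share falls below min_share.

    `expected` names the groups we want covered, so a group that is
    missing from the data entirely is reported too (its share is 0).
    The 0.2 default is an arbitrary illustration, not a recommendation.
    """
    shares = group_shares(groups)
    return sorted(g for g in expected if shares.get(g, 0.0) < min_share)


# Hypothetical labels for a face dataset: 90 lighter-skinned, 10 darker-skinned.
sample = ["lighter"] * 90 + ["darker"] * 10
flagged = underrepresented(sample, expected=["lighter", "darker"])
# flagged == ["darker"]
```

A count like this is only a first look; watching how the model behaves on each group after training matters just as much.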

Tech For Good

There's always going to be more that we can do to build tech for good. We only looked at four examples here, and I think we've only scratched the surface, so I'd love to hear your ideas. Say hello @RuthYMNg!

I'm excited about this, so check back here for some more blogs on these topics soon!

Time to make thoughtful tech!