A little SEO knowledge goes a long way for a web developer.

SEO, or Search Engine Optimisation, is all about helping the right users find your site by being visible in search results.

And SEO is most successful when marketers and developers work together. Marketers are usually best placed to know which SEO changes will drive marketing performance, but they often don't have the technical knowledge to implement them.

This is where developers come in. Knowing SEO basics helps developers understand what marketers are trying to do and suggest effective solutions. They can identify issues that marketers might miss.

I more or less started my career as a front-end software developer. One way or another, I ended up transitioning through technical growth marketing into being a full-time marketer. I've worked through some interesting SEO scenarios with dev teams and often talk to devs about SEO. So I wanted to share my perspective in the hope you'll find it useful.

What is SEO, and where do devs come in?

SEO is all about the process of optimising your site in order to rank as highly as possible in search engines.

To do that, we have to convince search engines like Google or Bing that we're worthy of that high ranking.

We can do this convincing through technical means (like site structure and site speed) and creative means (like writing great content and link building).

Web developers should think about SEO as an integral part of both their process and their responsibility.

That doesn't mean being an SEO expert! But there's still a significant role to play in SEO – there are some things, particularly architectural and technical choices, which often only devs can implement and which they're best placed to understand.

I think there are three parts to this:

- Collaboration with marketing: Marketers can set strategy and deal with the creative elements of SEO. Developers can deal with technical elements and spot problems arising. The best dev teams confidently make architectural SEO choices, offer technical guidance and spot potential problems or omissions in marketers' briefs.

- Proactive SEO practice: SEO is baked into the process and everyday practices of successful developers and engineering teams. They incorporate SEO considerations from the start.

- Technical implementation: They translate SEO strategies into technical realities. They understand how architectural and structural decisions impact SEO.

But what do devs really need to know about SEO? That's what we'll cover for the rest of this post, and I've broken it down into 5 big topics, starting with...

1. Every site should have these two things

First, a robots.txt

Your robots.txt file tells search engine robots how to crawl your site.

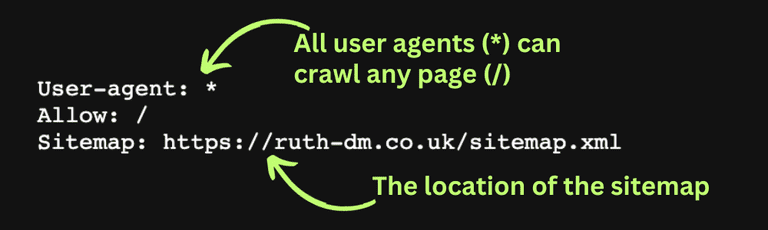

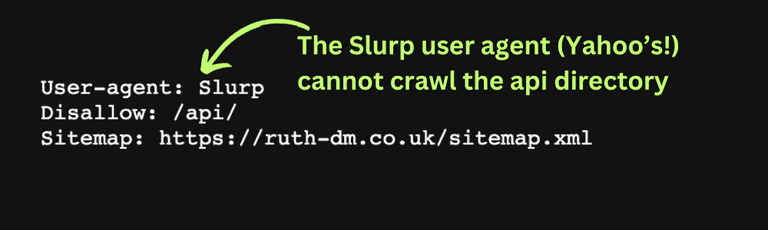

In particular, it'll tell them which parts to crawl, and which parts not to crawl. Here's Moz's guide, and a simple example...

A simple robots.txt

Here's a different example, where we're telling Yahoo's user agents (Slurp) not to crawl the API:

In practice, you'll find this useful to prevent crawling of pages that search engines aren't meant to access, like those meant for internal testing (like staging environments), admin-only areas or sensitive content.

How to do it:

- Put your robots.txt file in the root directory, and name it exactly that (it's case sensitive).

- Subdomains need separate robots.txt files.

- Include the location of your sitemap.
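To make the rules above concrete, here's a minimal sketch in Python that holds a small robots.txt as a string and checks it with the standard library's robots.txt parser. The example.com domain, the /api/ and /admin/ paths and the sitemap URL are all assumptions made up for illustration, and real crawlers may interpret edge cases differently from this parser.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for example.com (paths and sitemap URL are assumptions).
ROBOTS_TXT = """\
User-agent: Slurp
Disallow: /api/

User-agent: *
Disallow: /admin/

Sitemap: https://example.com/sitemap.xml
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content held in a string (no network request)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)

# Slurp has its own group, which keeps it out of the API.
slurp_can_crawl_api = rules.can_fetch("Slurp", "https://example.com/api/users")
# Crawlers without their own group fall back to the "*" group.
googlebot_can_crawl_api = rules.can_fetch("Googlebot", "https://example.com/api/users")
googlebot_can_crawl_admin = rules.can_fetch("Googlebot", "https://example.com/admin/")
# The Sitemap line is exposed separately (Python 3.8+).
sitemaps = rules.site_maps()
```

A check like this can sit in a test suite so that an accidental "Disallow: /" never reaches production unnoticed.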

Second, a sitemap

Your sitemap is a file that helps search engines discover your pages. There's not really any need to get into the technical stuff, but in short, Google uses your sitemap to learn about your pages, learn which ones are important and download them quickly. Here's Google's guide to sitemaps.

How to do it:

- First, decide which pages to include. Collaborate with your marketers.

- There are other formats, but XML sitemaps are the most versatile.

- You can test your sitemap by uploading it to the Search Console Sitemaps testing tool.

- Submit your sitemap to Google directly in the Search Console.

- And add it to your robots.txt to help search engines find it.
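If your CMS doesn't generate a sitemap for you, a basic XML one is simple to build. The sketch below is a minimal example, assuming a hypothetical list of page URLs on example.com. It only writes the required loc element for each page, and the function name build_sitemap is made up for illustration.

```python
import xml.etree.ElementTree as ET
from typing import List

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: List[str]) -> str:
    """Return a minimal XML sitemap listing each URL in a <loc> element."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        url_element = ET.SubElement(urlset, "url")
        ET.SubElement(url_element, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages agreed with the marketing team.
sitemap_xml = build_sitemap([
    "https://example.com/",
    "https://example.com/courses/coding/coding-for-beginners",
])
```

Writing sitemap_xml to a sitemap.xml file in the site root and referencing it from robots.txt covers the last two steps in the list above.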

Things to know:

- If you use a CMS, it might create your sitemap for you!

- Different search engines work in different ways, and they won't always "obey" your sitemap. Think of it more like a request to the search engine.

Takeaway – Make sure your site has a robots.txt hosted in the root directory that points to a sitemap

Useful tools:

- List of sitemap generators

- Google Search Console for monitoring, maintaining, and troubleshooting your site's presence on Google

2. Structuring a site

You'll probably get regular requests for new pages and directories from marketers, and making sure it's all structured in a sensible way is a joint effort.

Takeaway: URLs should be intuitive, readable and reflect the content of the page.

As a marketer, I love it when developers ask good questions about this. Challenging a structural change or string that hasn't been thought out is a great way to make sure the site gets extended in a sustainable, user-friendly way.

Is the change going to scale? I used to work at a coding school, and we offered one course on coding, so we stuck the course page on our root domain (provider.com/our-course/) – but we wanted to offer many courses in many categories, each of which would eventually have their own parent pages! So it might have been better to build it like this: provider.com/courses/coding/our-course.

Is the URL readable and relevant? Long URLs aren't great for users. Check your marketing team has considered keywords (though we don't want keyword stuffing!). For example, provider.com/courses/coding/coding-for-beginners helps users understand that this page is about a coding course for beginners, and the page is more likely to rank. There might be a very small SEO benefit from the search engine too, but this is kinda mysterious. I quite often see URLs like site.com/marketing/lead-capture/whitepaper-campaign-2024 – these don't send a great message to users, so avoid this.

Things to know

- Search engines read hyphens as spaces, so use hyphens, not underscores

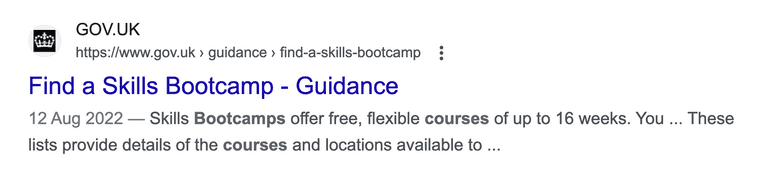

- At the time of writing, URL paths are visible to users on Google in breadcrumb style

Breadcrumbs ("guidance, find-a-skills-bootcamp") from the URL in Google
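One way to keep URLs readable and hyphenated by default is to generate them from page titles. Here's a minimal sketch, assuming the /courses/category/course structure from the coding school example. The helper names slugify and course_url are hypothetical, not from any framework.

```python
import re
import unicodedata


def slugify(text: str) -> str:
    """Lowercase the text and join its words with hyphens (never underscores)."""
    # Strip accents so "Café" becomes "Cafe" rather than being dropped entirely.
    ascii_text = (
        unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    )
    # Collapse every run of non-alphanumeric characters into a single hyphen.
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")


def course_url(category: str, title: str) -> str:
    """Build a path that leaves room for more categories and courses later."""
    return f"/courses/{slugify(category)}/{slugify(title)}"


beginner_course_path = course_url("Coding", "Coding for Beginners!")
```

Here beginner_course_path comes out as /courses/coding/coding-for-beginners, and a title containing underscores, such as "our_course", is converted to our-course.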

3. What good site hygiene looks like

It's a team effort between devs and marketers to maintain the technical health of our website.

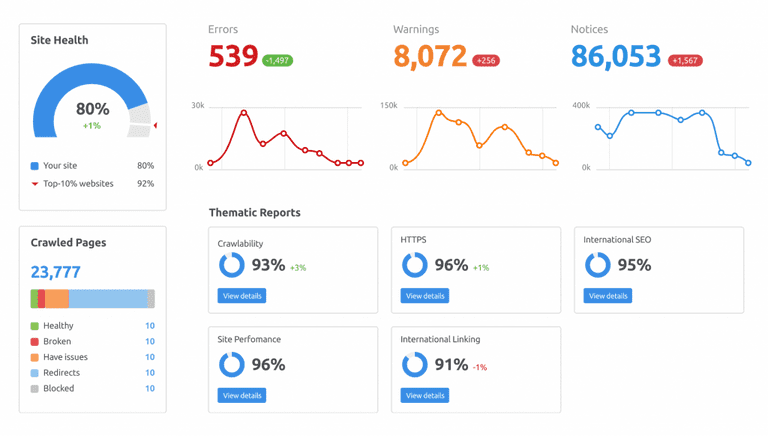

It's a good idea for both marketers and developers to audit site health. Each will have their own expertise and knowledge, and know when to raise different types of problems.

I suggest running a regular audit. Some of the best tools will automate this for you, and send the audit to your inbox. My favourite tool is SEMrush's site audit. You can schedule audits, and it'll group problems into errors (do something now!), warnings and notices. It kind of does your homework for you, because it'll explain exactly what the problem is and where, without you needing pre-existing knowledge.

SEMrush's site audit dashboard

A note about response codes

Response codes matter, so I reckon it's worth an aside to cover the big three...

- 200 (OK) – Make sure all your pages return this by default, unless there's a reason.

- 404 (Not Found) – Broken links? Fix 'em. They impact your site's credibility in search engines' eyes (and are rubbish for users, too.)

- 301 (Moved Permanently) – This is crucial when we change the URL of a page, because it passes the "SEO juice" (we call that link equity) from the old page to the new one. If we don't do this, we risk losing the ranking power the old page had built up!
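The three codes fit together roughly like this. It's a minimal sketch with made-up routing data (the old and new course URLs are assumptions borrowed from the coding school example), not how any particular framework handles routing.

```python
from http import HTTPStatus
from typing import Optional, Tuple

# Hypothetical routing data: the old course URL has moved under /courses/coding/.
REDIRECTS = {"/our-course/": "/courses/coding/our-course/"}
LIVE_PAGES = {"/", "/courses/coding/our-course/"}


def respond(path: str) -> Tuple[HTTPStatus, Optional[str]]:
    """Return the status code and, for redirects, the new location."""
    if path in REDIRECTS:
        # A permanent redirect tells search engines the page has moved for good.
        return HTTPStatus.MOVED_PERMANENTLY, REDIRECTS[path]
    if path in LIVE_PAGES:
        return HTTPStatus.OK, None
    return HTTPStatus.NOT_FOUND, None


old_url_response = respond("/our-course/")
```

The key habit is adding an entry to the redirect map in the same change that renames a URL, so the old address never returns a 404.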

Takeaway – Audit your site and fix errors that get flagged.

Useful Tools:

4. Building pages search engines (and humans) love

Developers are going to be the ones leading the way on some aspects of SEO-friendly site-building.

And, sometimes, we marketers will send briefs which might not consider or cover some SEO best practices. Front-end developers should be there to stop things falling through the cracks.

Mobile-first indexing

Developers need to know that Google now uses mobile-first indexing. In other words, what's on your mobile site predominantly determines how Google indexes and ranks you.

Use the Page Experience tool in Search Console to check how Google sees your pages.

These days, having different content rendering on mobile vs desktop isn't generally a good idea.

Speed is key

A slow-loading website is a surefire way to turn off both potential customers and search engines. Page speed is a critical factor in SEO rankings and user satisfaction.

Site speed is a big topic (for a more thorough overview, here's the Moz guide), but here are some of the headline principles:

- Leverage browser caching.

- Minify your HTML, CSS and JavaScript files. Many frameworks let you do this out of the box, or have plugins to handle this.

- Optimise images (tools below). On average, unoptimised images make up 75% of web page weight!

- Avoid render-blocking JavaScript.

- Make use of Content Delivery Networks (CDNs).
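As one example of the browser caching point, here's a minimal sketch of choosing a Cache-Control header per file type. It assumes your build tool fingerprints static assets (for example app.3f9a1c.js), so they can be cached for a long time, while HTML is revalidated on each visit. The extension list and header values are assumptions to adapt, not universal recommendations.

```python
from pathlib import PurePosixPath

# Assumed policy: fingerprinted assets are cached for a year, everything else revalidates.
LONG_LIVED = "public, max-age=31536000, immutable"
REVALIDATE = "no-cache"
STATIC_EXTENSIONS = {".css", ".js", ".png", ".jpg", ".webp", ".svg", ".woff2"}


def cache_control_for(path: str) -> str:
    """Pick a Cache-Control header value based on the file extension in the path."""
    suffix = PurePosixPath(path).suffix.lower()
    return LONG_LIVED if suffix in STATIC_EXTENSIONS else REVALIDATE


script_header = cache_control_for("/assets/app.3f9a1c.js")
page_header = cache_control_for("/courses/coding/")
```

Many hosts and CDNs let you express the same policy in configuration instead of code, which is usually the simpler route.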

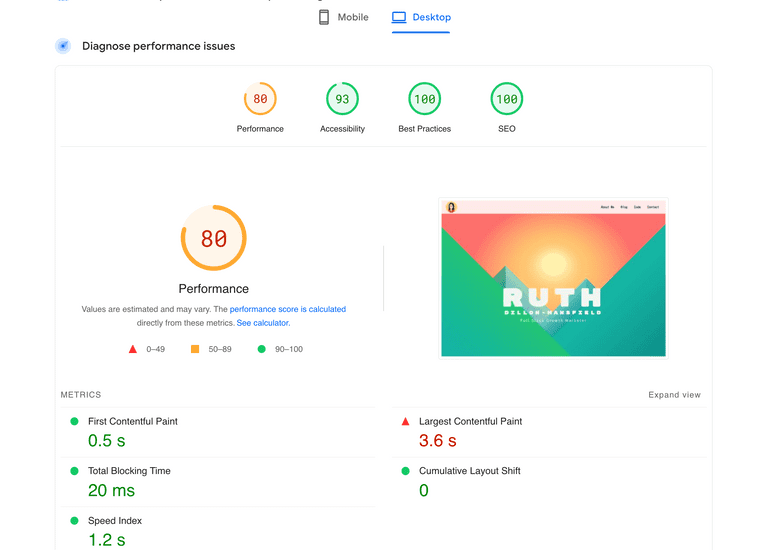

Check your site's speed with Google PageSpeed Insights.

Google PageSpeed Insights

Metadata devs should implement by default

Site metadata tells search engines about your pages. Every marketing page needs:

- A title tag – displayed on search engine results pages (SERPs) and browser tabs. Max 60 chars.

- A meta description – short text summaries which display on search engine results pages (SERPs). Max 160 chars.

- A canonical tag – tells search engines which version of a page is the "main" or "canonical" one that should be indexed. This prevents you competing with yourself (a big example is if you have a www. subdomain!)

- A meta viewport tag – tells browsers (and search engines rendering your page) how to scale and display a page on different devices.

- A meta robots tag – tells the search engine whether a page should be indexed and whether its links should be followed.

- Open graph tags – these tell social media sites like Facebook and LinkedIn what to display when you share a link to your page. I wrote about these in detail here.

There are more! But these are the ones I think devs should be implementing by default. Your marketers might request others. In general, less is often more. Here's what your page might look like if you include these:

<title>My Page's Title</title>

<meta name="description" content="An amazing description all about my page that makes users who see it in the search engine results understand what the page is about and want to click.">

<link rel="canonical" href="https://example.com/original-page-url/" />

<meta name="viewport" content="width=device-width, initial-scale=1">

<meta name="robots" content="follow, index, max-snippet:-1, max-video-preview:-1, max-image-preview:large"/>

<!-- Open Graph -->

<meta property="og:title" content="My Title" />

<meta property="og:description" content="My Description" />

<meta property="og:image" content="https://photo-url.com" />

<meta property="og:url" content="https://mywebsite.com/my-project" />

<meta property="og:site_name" content="my website" />
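A check like the one below can catch missing or overlong metadata before a page ships. It's a minimal sketch using Python's built-in HTML parser, with the 60 and 160 character limits taken from the list above. The class and function names are hypothetical, and it only covers the title, description and canonical tags.

```python
from html.parser import HTMLParser
from typing import List


class HeadAudit(HTMLParser):
    """Collect the title, meta description and canonical URL from a page."""

    def __init__(self) -> None:
        super().__init__()
        self.in_title = False
        self.title = ""
        self.description = None
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self.in_title = True
        elif tag == "meta" and attributes.get("name") == "description":
            self.description = attributes.get("content") or ""
        elif tag == "link" and (attributes.get("rel") or "").lower() == "canonical":
            self.canonical = attributes.get("href")

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


def audit_head(html: str) -> List[str]:
    """Return a list of problems found, or an empty list if all checks pass."""
    audit = HeadAudit()
    audit.feed(html)
    audit.close()
    problems = []
    if not audit.title.strip():
        problems.append("title is missing")
    elif len(audit.title) > 60:
        problems.append("title is longer than 60 characters")
    if audit.description is None:
        problems.append("meta description is missing")
    elif len(audit.description) > 160:
        problems.append("meta description is longer than 160 characters")
    if audit.canonical is None:
        problems.append("canonical link is missing")
    return problems


# Hypothetical page head that should pass every check.
GOOD_HEAD = """<head>
<title>My Page's Title</title>
<meta name="description" content="A short summary of the page.">
<link rel="canonical" href="https://example.com/original-page-url/" />
</head>"""

good_head_problems = audit_head(GOOD_HEAD)
```

Running audit_head over your rendered templates in CI is one way to make these tags a default rather than something marketers have to request.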

Devs should know some simple things about headings

Headings and SEO are a marketer's area of knowledge (more here), but every front-end dev should know the basics:

- 1 h1 tag per page

- Use h2 tags to organise sections, and h3s for even more granularity within those sections
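These heading basics are easy to check automatically. Here's a minimal sketch using Python's built-in HTML parser. The function name heading_problems is hypothetical, and it only checks the two rules above: exactly one h1, and no h3 before the first h2.

```python
from html.parser import HTMLParser
from typing import List


class HeadingCollector(HTMLParser):
    """Record the level (1 to 6) of every heading tag in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_problems(html: str) -> List[str]:
    """Return a list of heading problems, or an empty list if none are found."""
    collector = HeadingCollector()
    collector.feed(html)
    collector.close()
    problems = []
    h1_count = collector.levels.count(1)
    if h1_count != 1:
        problems.append(f"expected exactly one h1, found {h1_count}")
    seen_h2 = False
    for level in collector.levels:
        if level == 2:
            seen_h2 = True
        elif level == 3 and not seen_h2:
            problems.append("h3 appears before any h2 section")
            break
    return problems


# Hypothetical page outline that follows both rules.
tidy_page_problems = heading_problems(
    "<h1>Courses</h1><h2>Coding</h2><h3>Beginners</h3><h2>Design</h2>"
)
```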

Takeaway: The best pages are those built with both search engine guidelines and human user experience in mind

Useful Tools:

- PageSpeed Insights: Google’s PageSpeed Insights lets you analyse any page's speed immediately

- Mobile Testing: Google's Lighthouse helps you optimise web pages

- Image optimisation tools: PicMonkey or Adobe's free tool for reducing file sizes. Kraken is a personal favourite of mine that offers slick lossless compression with an API.

5. Static or dynamic? (A common source of enormous, costly mistakes!)

When I first started working with one of my previous employers, the devs were super proud of the site they'd built. They explained how the content dynamically updated in real-time, so all the different elements of a page were fresh and fetched straight from their individual sources. It was clearly a labour of love. From a technical point of view, I'd have been proud to have created what they had.

But it was a huge head-in-hands moment.

Dynamically generated sites are usually* a huge SEO headache.

*This is sort of nuanced, but it doesn't matter too much here. There are counterexamples where dynamic content doesn't matter (maybe service pages, user comments), but as a rule of thumb, most of your site should probably be static.

Although they're getting better at it, search engines like Google are still simply much better at crawling static sites.

Imagine you're populating your site's <title> tag dynamically on page load. When Google's robots visit for a scrape, there'll be nothing there. So in the Google search results, Google just invents a title, perhaps using your H1 tag.
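To see the problem for yourself, look at the HTML your server sends before any JavaScript runs. The sketch below is a minimal illustration with two made-up pages. It reads the title the way a crawler that doesn't execute JavaScript would, which is an assumption, since some crawlers do render scripts at a later stage.

```python
from html.parser import HTMLParser


class TitleReader(HTMLParser):
    """Read the text inside <title> from raw HTML, ignoring scripts."""

    def __init__(self) -> None:
        super().__init__()
        self.in_title = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self.in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


def title_without_javascript(html: str) -> str:
    """Return the page title as it appears in the HTML the server sent."""
    reader = TitleReader()
    reader.feed(html)
    reader.close()
    return reader.title.strip()


# Hypothetical client-rendered page: the title is only set once the script runs.
CLIENT_RENDERED = """<html><head><title></title>
<script>document.title = "Coding for Beginners";</script>
</head><body><div id="app"></div></body></html>"""

# Hypothetical static page: the title is already in the markup.
STATIC = """<html><head><title>Coding for Beginners</title></head>
<body><h1>Coding for Beginners</h1></body></html>"""

client_rendered_title = title_without_javascript(CLIENT_RENDERED)
static_title = title_without_javascript(STATIC)
```

The client-rendered page yields an empty title, while the static page yields "Coding for Beginners". Viewing the page source in a browser gives the same quick check without any code.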

Statically-served sites have a bunch of other advantages in general. They're much faster (see the speed section above), caching is more efficient and there's less server load, to name a few.

Another alternative is server-side rendering (SSR), where content is generated on the server and sent to the client as HTML. SSR is a middle ground – it lets us enjoy some of the SEO benefits of static sites, while being able to allow for flexibility and interactivity.

Takeaway – If your site's dynamically rendered, you probably have a project on your hands

Using a CMS for static or server-side rendered sites

You may well already have a Content Management System (CMS), especially if you're at a medium or large business. Without one, managing content on static sites can be a pain. A CMS allows your marketers to manage your website's content without needing to manually update code.

A headless CMS provides content as data over an API, which can be integrated with static and server-side rendered sites. It's ideal for combining the SEO benefits of static sites with the ease of content management. Your marketers will love having a CMS, and unless your site is small, you'll absolutely need one.
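In practice, the pattern is a build step that pulls entries from the CMS and writes out plain HTML. Here's a minimal sketch of that step, with a hard-coded entry standing in for the API response. The field names (slug, title, summary, body) and the /courses/ output path are assumptions, not the schema of any particular CMS.

```python
from html import escape
from typing import Dict, List

# Hypothetical entry, shaped like a JSON payload a headless CMS API might return.
ENTRIES = [
    {
        "slug": "coding-for-beginners",
        "title": "Coding for Beginners",
        "summary": "Learn to code from scratch.",
        "body": "Twelve weeks, no experience needed.",
    }
]


def render_page(entry: Dict[str, str]) -> str:
    """Render one entry to static HTML, with the metadata already in the markup."""
    return (
        "<!doctype html>\n<html>\n<head>\n"
        f"<title>{escape(entry['title'])}</title>\n"
        f'<meta name="description" content="{escape(entry["summary"])}">\n'
        "</head>\n<body>\n"
        f"<h1>{escape(entry['title'])}</h1>\n"
        f"<p>{escape(entry['body'])}</p>\n"
        "</body>\n</html>\n"
    )


def build_site(entries: List[Dict[str, str]]) -> Dict[str, str]:
    """Map each output file path to its rendered HTML."""
    return {f"/courses/{entry['slug']}/index.html": render_page(entry) for entry in entries}


pages = build_site(ENTRIES)
```

Static site generators and server-side rendering frameworks do a far more complete version of this, but the idea is the same: the title and content exist in the HTML before a crawler ever asks for it.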

Useful tools:

- Static site generators: Gatsby (my personal favourite), Jekyll

- Server-side rendering frameworks: Next.js for React apps or Nuxt.js for Vue apps

- CMS: Contentful for heavyweight use cases (has version control and much more); Prismic is a fast-growing challenger. Both are great.

That's it (for now)

SEO is an enormous topic. But, honestly, most web developers won't have to worry about getting into the detail - knowing the basics along with some technical best practice is often enough.

It doesn't need to be complicated. But when you're confident in implementing basic best practices by default, spotting problems and fixing issues, you'll find that little bit of knowledge has a big impact (and can increase your stock value on the job market, too).

A little SEO knowledge goes a long way. Promise!

PS. While I know a fair bit about SEO, I'm not an expert – and SEO moves really fast! If you see something that isn't quite right or out of date, or want to share your thoughts, I'd love to hear from you. You can contact me here.